Systems based on facial biometrics became extremely common during the pandemic, a period when social distancing was necessary and requiring people to be physically present in specific places as a security measure became impractical. As a result, the facial recognition market reached close to US$3.7 billion in 2020 and is expected to grow by more than 21% by 2026. As with every major new development in the security market, this explosion of facial biometric systems has been followed by new and increasingly sophisticated forms of fraud. To understand this problem a little better, let’s first look at how a traditional facial recognition system works.

How does it work?

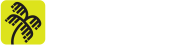

The typical flow of a face recognition system can be divided into two steps: liveness and face match. The first stage, liveness, was first articulated by Dorothy E. Denning in 2001 in an article where she stated that what matters most for biometric systems “is liveness, not secrecy”. In other words, Denning points out that users should not worry about keeping their biometric information secret, because anyone can see our faces on the street or find our pictures on social networks. It is the system that must be able to verify whether that information is genuine, i.e., presented by a live person. This is how liveness became the primary fraud detector in biometric systems, responsible for identifying forgery attempts in every kind of biometric system, from the oldest fingerprint readers to the new facial recognition systems.

But the process doesn’t stop at liveness: after the photo is validated and accepted as authentic, it is forwarded to the face match. The face match only answers whether that face belongs to the person the user claims to be, and it does so regardless of how the photo was obtained. A system can therefore only be considered secure if its liveness step performs the right validations and does not accept forgeries as pictures of real people.
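The two-stage flow can be sketched in a few lines of Python. This is a minimal illustration, not any particular vendor’s implementation. `liveness_score` and `embed` are hypothetical callables that stand in for a liveness model and a face-embedding model, and the thresholds are arbitrary placeholders. The sketch makes the ordering explicit: the face match never sees an image that failed liveness, and it has no way of knowing how the image it receives was obtained.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence

@dataclass
class Decision:
    accepted: bool
    reason: str

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def verify_face(image: object, enrolled_embedding: Sequence[float],
                liveness_score: Callable[[object], float],
                embed: Callable[[object], Sequence[float]],
                liveness_threshold: float = 0.9,
                match_threshold: float = 0.8) -> Decision:
    # Stage 1, liveness: is a live person in front of the camera?
    if liveness_score(image) < liveness_threshold:
        return Decision(False, "liveness_failed")
    # Stage 2, face match: is this the claimed person? It cannot tell how the image was obtained.
    if cosine_similarity(embed(image), enrolled_embedding) < match_threshold:
        return Decision(False, "face_mismatch")
    return Decision(True, "ok")
```

If `liveness_score` is fooled by a photo of the victim, the face match will accept that photo without complaint. This is why liveness carries most of the anti-fraud burden.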

So what are the vulnerabilities of liveness?

Several techniques, from the simplest to the most complex, have been developed to bypass liveness detection and make the system believe that a photo comes from a real person rather than a fake. Others work mainly as enablers for additional techniques. There are also social engineering strategies that have little to do with fooling the system itself and more to do with manipulating the procedure around it.

Digital or printed photos and videos

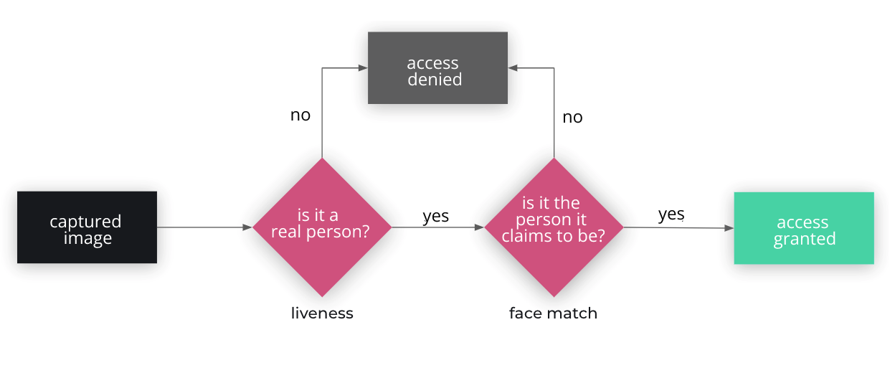

One of the simplest ways to defraud a facial biometrics system is to use the application to take pictures of other pictures, or of frames extracted from videos. Systems generally try to deal with this by using the flash or raising the phone’s screen brightness, which creates reflections that are easy to identify in the captured image. This, however, is not a very effective strategy, since the fraudster can manually control the device’s brightness. Without that brightness, any reflections are unlikely to be noticed.

Furthermore, photos of a high-resolution monitor taken with a low-resolution camera will probably never be identified as forgery attempts.
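The brightness trick described above comes down to comparing two captures of the face region, one taken with the screen dim and one with it lit. The sketch below assumes the caller has already cropped the face from both frames as grayscale NumPy arrays. `brightness_response` and its `min_gain` threshold are hypothetical. A fraudster who controls the lighting can defeat this check, so it should only ever be one weak signal among many.

```python
import numpy as np

def brightness_response(face_dim: np.ndarray, face_lit: np.ndarray,
                        min_gain: float = 8.0) -> bool:
    """Return True if the face crop got noticeably brighter when the screen was lit.

    Both inputs are grayscale crops of the same face region, captured with the
    screen at low and at high brightness. `min_gain` is in gray levels (0-255)
    and is an arbitrary placeholder, not a tuned value.
    """
    if face_dim.shape != face_lit.shape:
        raise ValueError("both crops must have the same shape")
    # Convert to float before averaging so uint8 arithmetic cannot wrap around.
    gain = face_lit.astype(np.float64).mean() - face_dim.astype(np.float64).mean()
    return bool(gain >= min_gain)
```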

Printed 2D Mask

Another extremely simple but surprisingly effective approach is a printed 2D mask with holes cut out for the eyes and mouth. Many liveness systems rely on challenges such as blinking or smiling and treat correctly completing them as valid proof of life. The holes let the system perceive and validate the challenges performed by the fraudster. Meanwhile, the mask makes it very likely that the victim’s face will be successfully recognized in the next step: the face match.

Hyper-realistic 3D Mask

Realistic and hyper-realistic 3D masks follow the same idea as the previous method, but with a much higher level of sophistication. They can cost vast sums of money and are mainly used in attacks aimed at a specific target. These masks let the wearer perform challenges such as blinking and smiling. They can also mimic skin texture and get past even more robust systems.

Video: The Old Man silicone mask by Metamorphose Masks, making and unmasking

Deepfake

With advances in artificial intelligence, especially in Machine Learning and Deep Learning, so-called deepfakes have emerged. This technique uses neural networks to fit faces and spoken lines onto pre-existing videos. The result is videos of people doing things they never did or saying things they never said.

Like everything in technology, their popularity has been accompanied by advances in the field. These have improved the results and made the technique easier to use through open-source software such as Faceswap. As a result, deepfakes have become a problem in many areas, and fake videos have been used to attack mainly politicians and celebrities. In the world of scams, the technique is used above all to forge entire videos of victims performing the challenges requested by liveness systems, starting from a single photo that can easily be obtained from social networks.

Video: You Won’t Believe What Obama Says In This Video! 😉

Device compromise

Although device compromise does not circumvent liveness by itself, it enables other techniques to do so. On Android devices, for example, video frames can be injected into the device’s camera feed using applications such as Fake Camera or Forgery Camera. Device compromise detection techniques can help assess the risk that a picture was captured illegitimately. These include checking for suspicious apps, detecting whether root access is enabled, and detecting whether the app is being analyzed with hooking tools.

Social Engineering

Some frauds require little technical knowledge and focus instead on cheating the photo-taking procedure through social engineering. Fraudsters contact victims, confirm their personal data, and claim to be from a company that wants to send them gifts. The data probably comes from major leaks. The fraudsters then deliver the gifts to the victim and say they need facial recognition to confirm receipt. Of course, the recognition has nothing to do with the gifts and is usually used to release funds or gain access to the user’s account.

OK, so how can it be mitigated?

Almost all liveness systems already include simpler mitigations, such as changing the screen brightness or using standard challenges. Beyond these, there are other, less common techniques with the potential to prevent even more fraud.

Device Analysis

Device analysis aims to detect patterns that indicate potentially dangerous devices, independently of the biometric data itself. It checks a number of characteristics to decide whether the device performing the transaction is known and trusted. The alternative is a brand-new device that has never been seen before and shows potential fraud indicators such as suspicious applications or root access.

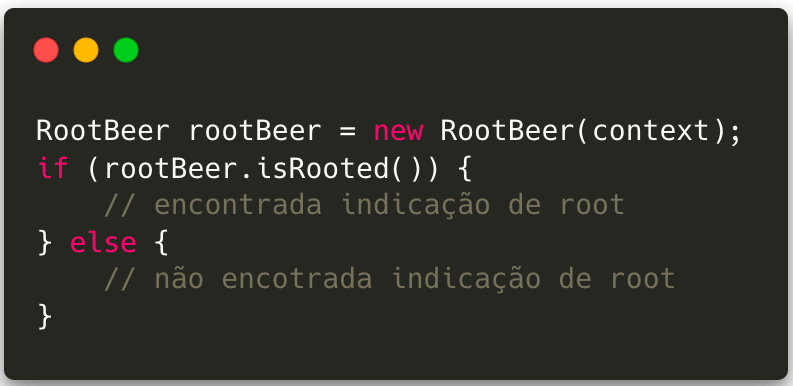
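To make the idea concrete, here is a sketch of how device signals might be combined into a single risk score. The `DeviceSignals` fields, the weights, and the cap at 1.0 are all hypothetical choices for illustration. A real system, including commercial products, would use its own signals and calibrate the weights on labelled fraud data.

```python
from dataclasses import dataclass, field

@dataclass
class DeviceSignals:
    device_id: str
    rooted: bool = False
    hooking_detected: bool = False
    suspicious_apps: list[str] = field(default_factory=list)

# Hypothetical weights for illustration only.
RISK_WEIGHTS = {"new_device": 0.2, "rooted": 0.4, "hooking": 0.4, "suspicious_apps": 0.3}

def device_risk(signals: DeviceSignals, known_device_ids: set[str]) -> float:
    """Return a risk score in [0, 1]; higher means the device deserves more scrutiny."""
    score = 0.0
    if signals.device_id not in known_device_ids:
        score += RISK_WEIGHTS["new_device"]       # never seen before for this user
    if signals.rooted:
        score += RISK_WEIGHTS["rooted"]
    if signals.hooking_detected:
        score += RISK_WEIGHTS["hooking"]          # e.g. an instrumentation tool attached
    if signals.suspicious_apps:
        score += RISK_WEIGHTS["suspicious_apps"]  # e.g. virtual-camera apps
    return min(score, 1.0)
```

A score like this would typically trigger extra challenges or manual review rather than an outright rejection.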

There are some good third-party libraries, and even native APIs, that help with this analysis on mobile devices. On Android, the open-source RootBeer library can identify rooted devices. Root access gives fraudsters more freedom to manipulate internal data on the device.

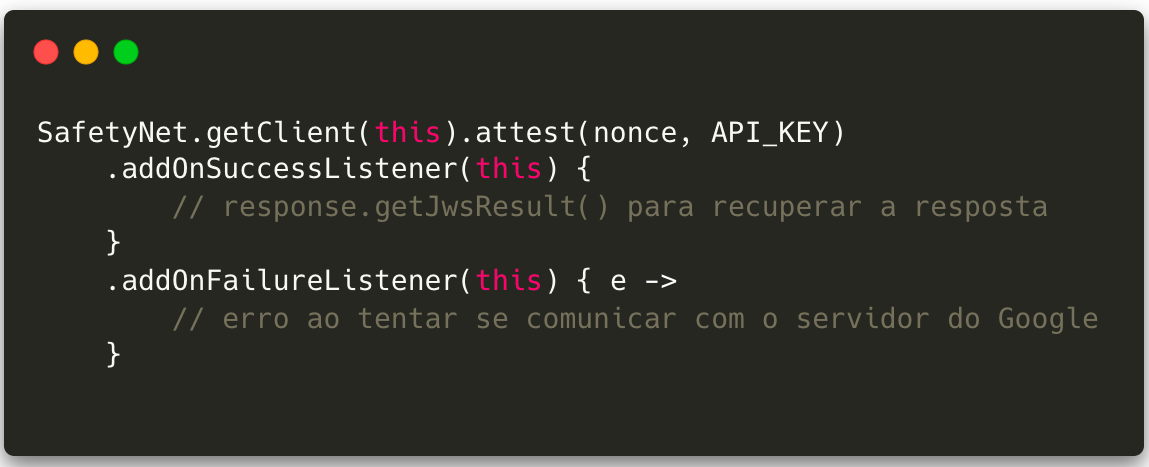
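RootBeer combines several heuristics. One of the simplest is looking for an `su` binary in the places where rooting tools usually install it. The Python sketch below illustrates only that heuristic. It is not RootBeer’s API, which is used from Kotlin or Java. The directory list is an illustrative assumption. `exists` is injectable so that the check can be tested without a rooted device.

```python
import os
from typing import Callable

# Illustrative subset of directories where an `su` binary often lives on rooted
# Android devices. This list is an assumption for the sketch, not RootBeer's own list.
SU_DIRECTORIES = [
    "/sbin", "/system/bin", "/system/xbin", "/system/sd/xbin",
    "/data/local", "/data/local/bin", "/data/local/xbin", "/su/bin",
]

def find_su_binaries(exists: Callable[[str], bool] = os.path.isfile) -> list[str]:
    """Return every candidate `su` path for which `exists` is true."""
    return [f"{d}/su" for d in SU_DIRECTORIES if exists(f"{d}/su")]

def looks_rooted(exists: Callable[[str], bool] = os.path.isfile) -> bool:
    return bool(find_su_binaries(exists))
```

A missing `su` binary proves little, because root-hiding tools exist. This is why libraries combine many independent checks.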

In addition, Google provides the SafetyNet APIs. SafetyNet Verify Apps checks whether potentially harmful applications are installed on the device. SafetyNet Attestation tests whether the device is genuine by checking its software and hardware for integrity issues and comparing it with already-approved reference devices.

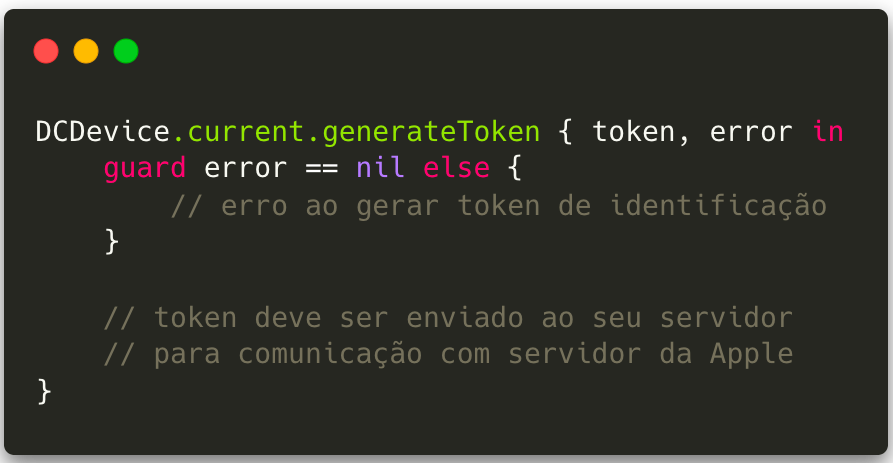
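On the server, a SafetyNet Attestation result arrives as a JWS whose payload includes a `nonce` and the `ctsProfileMatch` and `basicIntegrity` booleans. The sketch below only decodes the payload and checks those claims. It deliberately omits signature and certificate-chain verification, which a production server must perform before trusting anything in the payload. `expected_nonce` is assumed to be the value exactly as it appears in the payload. Google has since deprecated SafetyNet Attestation in favor of the Play Integrity API, so new integrations should follow the current documentation.

```python
import base64
import hmac
import json

def _b64url_decode(segment: str) -> bytes:
    # JWS segments are base64url-encoded without padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))

def check_attestation_claims(jws: str, expected_nonce: str) -> bool:
    """Check the claims inside a SafetyNet attestation JWS.

    WARNING: this does NOT verify the JWS signature or its certificate chain.
    A real server must do that first; otherwise any client can forge the claims.
    """
    parts = jws.split(".")
    if len(parts) != 3:
        return False
    try:
        claims = json.loads(_b64url_decode(parts[1]))
    except (ValueError, TypeError):
        return False
    if not isinstance(claims, dict):
        return False
    return (hmac.compare_digest(str(claims.get("nonce", "")), expected_nonce)
            and claims.get("ctsProfileMatch") is True
            and claims.get("basicIntegrity") is True)
```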

On iOS, the IOSSecuritySuite library provides ways to detect jailbreaks, attached debuggers, reverse engineering, integrity breaches, and function hooking. In addition, the native DeviceCheck framework offers two features that are useful here:

1. The device identification feature lets developers store two bits of data per device. This means a device can be marked as potentially dangerous, and that mark can be retrieved even after a factory reset;

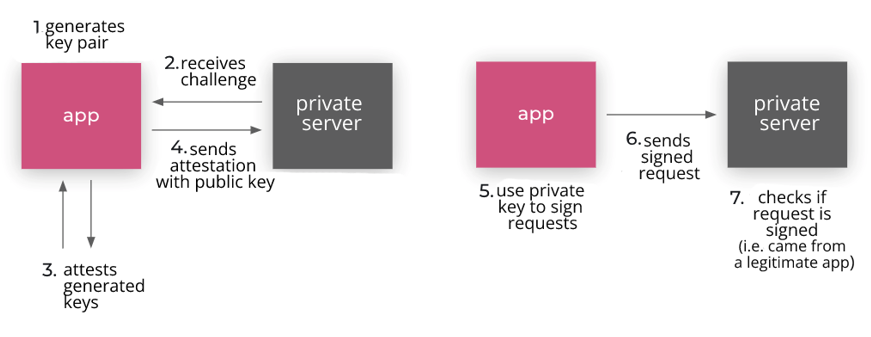

2. App Attest has a similar goal to SafetyNet Attestation but does so by creating a pair of hardware-based cryptographic keys certified by Apple’s servers. With the keys generated and certified as coming from a trusted application and device, it is possible to sign the requests sent to the server and guarantee that they come from legitimate instances of the application.
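On the server side, the two DeviceCheck bits are set through Apple’s `update_two_bits` endpoint. The sketch below only builds the JSON request body. Sending it also requires a JWT signed with a DeviceCheck private key, which is omitted here. The field names reflect our understanding of Apple’s DeviceCheck documentation and should be checked against it. Apple leaves the meaning of the bits to the developer, so the meanings given to `bit0` and `bit1` and the `interpret_bits` policy are hypothetical.

```python
import json
import time
import uuid

# Hypothetical meaning we assign to Apple's two per-device bits.
FLAGGED_BIT = "bit0"    # device was involved in a suspicious liveness attempt
CONFIRMED_BIT = "bit1"  # fraud was confirmed by manual review

def build_update_two_bits_body(device_token: str, flagged: bool, confirmed: bool) -> str:
    body = {
        "device_token": device_token,          # token generated on the device via DCDevice
        "transaction_id": str(uuid.uuid4()),   # unique id for this request
        "timestamp": int(time.time() * 1000),  # milliseconds since the Unix epoch
        FLAGGED_BIT: flagged,
        CONFIRMED_BIT: confirmed,
    }
    return json.dumps(body)

def interpret_bits(bit0: bool, bit1: bool) -> str:
    """Hypothetical policy applied after querying the bits for an incoming device."""
    if bit1:
        return "block"
    if bit0:
        return "step_up"  # e.g. require extra challenges or manual review
    return "allow"
```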

In addition to the options above, there are proprietary frameworks, such as AllowMe, that combine these and many other security practices with contextual analysis to ensure that the device is legitimate. This type of analysis is not focused on preventing liveness fraud. However, it can identify fraudulent actions in many different types of transactions and can help prevent scams based on social engineering or device compromise.

Challenges

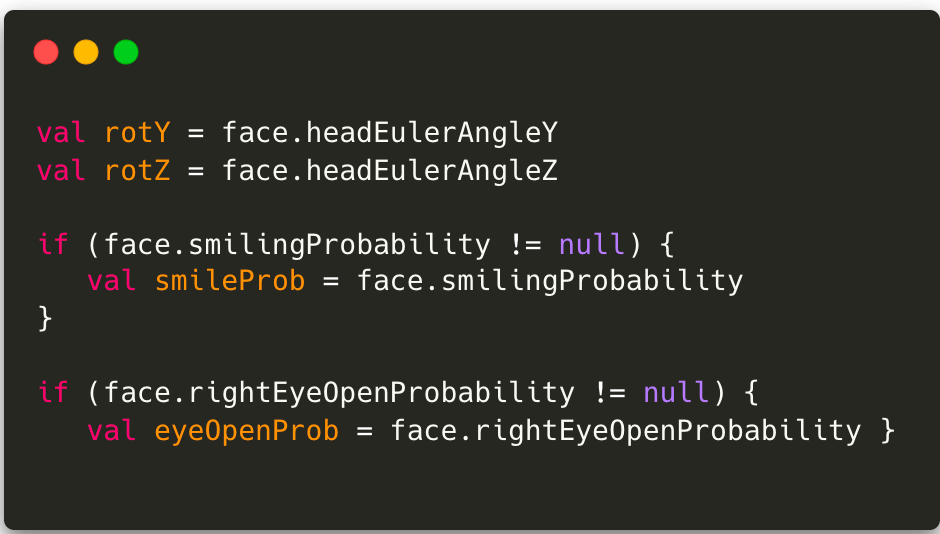

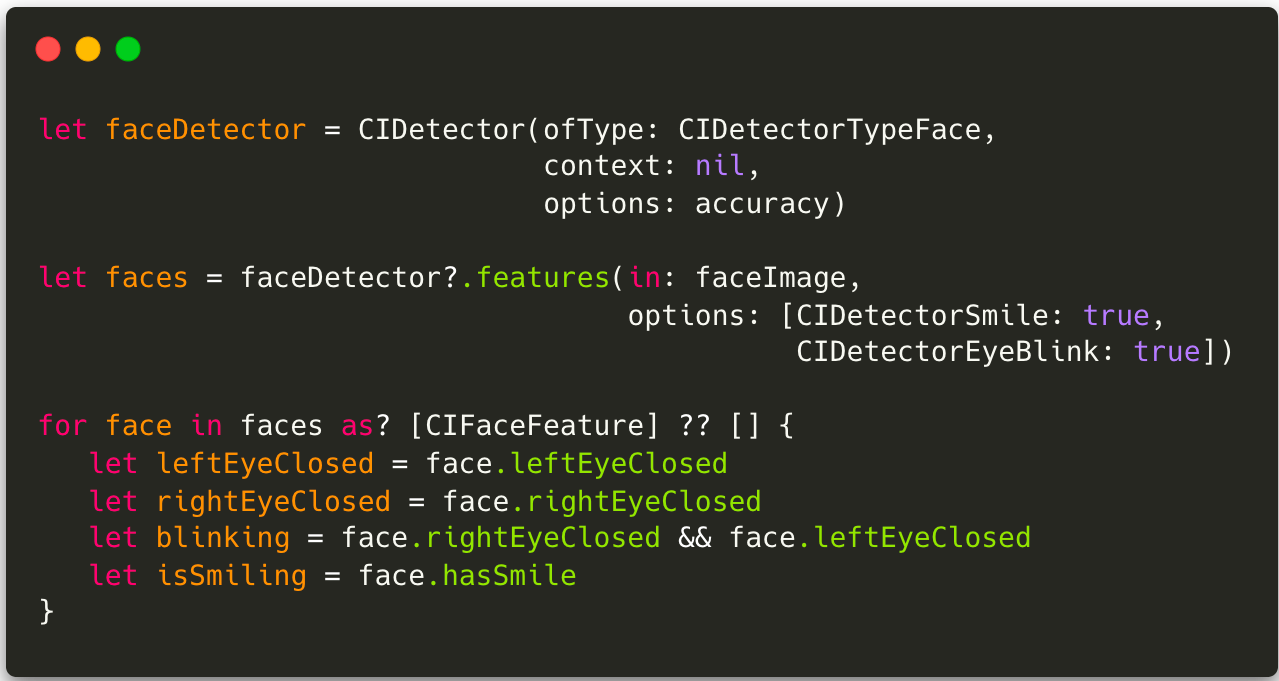

Challenges are common in liveness systems and add a layer of difficulty that prevents static photos from being used for forgery. The main mobile platforms (Android and iOS) offer ready-made solutions for detecting movements such as blinking, smiling, or turning the head.

There are also libraries such as OpenCV, which specializes in image and video processing, that can be used to build even more complex challenges.
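As an example of a challenge check, blinking is often detected with the eye aspect ratio (EAR) introduced by Soukupová and Čech in 2016. EAR is computed from six landmarks around each eye. The sketch assumes the landmarks come from some face landmark detector. The 0.2 threshold and the two-frame minimum are placeholders to tune per camera and frame rate. `count_blinks` is a hypothetical helper.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks p1..p6 (p1/p4 corners, p2/p3 top, p6/p5 bottom)."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def count_blinks(ear_series: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1  # eye currently closed
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the end of the clip
        blinks += 1
    return blinks
```

An open eye keeps a roughly constant EAR. The value drops sharply for a few frames during a blink, which is what `count_blinks` looks for.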

It’s worth mentioning, however, that the biggest problem with this proof-of-life technique is the assumption that simply completing the challenge is enough to validate the user as a real person. The image may come from a video in which the person makes simple facial movements. One way to make this harder for fraudsters is to generate challenges dynamically: from a set of possible challenges, the system asks for a different, unpredictable one each time. This prevents fraudsters from using, for example, pre-recorded videos of victims.
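A minimal sketch of dynamic challenges works as follows. The server draws a random, non-repeating sequence of actions and binds it to a one-time nonce. It then rejects responses that arrive late or in the wrong order. The challenge names, the 20-second limit, and the session structure are assumptions. How each action is actually detected in the video is left out.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical set of actions the client-side detector is able to recognise.
CHALLENGES = ["blink", "smile", "turn_left", "turn_right", "nod"]

@dataclass
class ChallengeSession:
    nonce: str
    steps: List[str]
    issued_at: float = field(default_factory=time.monotonic)

def issue_challenge(n_steps: int = 3) -> ChallengeSession:
    """Draw an unpredictable, non-repeating sequence of actions bound to a fresh nonce."""
    steps = secrets.SystemRandom().sample(CHALLENGES, k=n_steps)
    return ChallengeSession(nonce=secrets.token_hex(16), steps=steps)

def verify_response(session: ChallengeSession, nonce: str, observed: List[str],
                    max_age_s: float = 20.0, now: Optional[float] = None) -> bool:
    """Accept only the right session, answered in time, with the actions in the requested order."""
    now = time.monotonic() if now is None else now
    if not secrets.compare_digest(nonce, session.nonce):
        return False
    if now - session.issued_at > max_age_s:
        return False
    return list(observed) == session.steps
```

In a real flow, the session would also be discarded after a single verification attempt so that it cannot be replayed.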

Deepfakes Detection

Since this is still a very new technique, deepfake videos commonly contain small inconsistencies. These often go unnoticed by the human eye but can be detected by computers trained with Machine Learning techniques. The main points that liveness systems usually analyze to stop deepfake videos include:

- blinking or lip movements that are inconsistent or irregular;
- low-resolution videos, or videos with areas of different resolution;
- cheeks and forehead with skin that is too smooth or too wrinkled compared with the rest of the face;
- shadows in unusual positions.
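One of the cues above, areas of different resolution, can be approximated with a classic sharpness measure: the variance of the Laplacian. This sketch compares that variance inside the face box with the rest of the frame. A face that is much smoother or much sharper than its surroundings is a hint, not proof, of a pasted or generated region. The `face_box` (which would come from a face detector) and the `max_ratio` threshold are assumptions. Competitive deepfake detectors rely on trained models rather than on a single hand-made rule like this one.

```python
import numpy as np

def laplacian(gray: np.ndarray) -> np.ndarray:
    """4-neighbour Laplacian; the one-pixel border is left at zero."""
    g = gray.astype(np.float64)
    lap = np.zeros_like(g)
    lap[1:-1, 1:-1] = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
                       - 4.0 * g[1:-1, 1:-1])
    return lap

def sharpness_ratio(gray: np.ndarray, face_box: tuple[int, int, int, int]) -> float:
    """Larger over smaller Laplacian variance of the face box (x, y, w, h) vs. the background."""
    x, y, w, h = face_box
    lap = laplacian(gray)
    inside = np.zeros(gray.shape, dtype=bool)
    inside[y:y + h, x:x + w] = True
    valid = np.zeros(gray.shape, dtype=bool)
    valid[1:-1, 1:-1] = True  # ignore the border, where the Laplacian was not computed
    face_var = lap[inside & valid].var()
    background_var = lap[~inside & valid].var()
    return float(max(face_var, background_var) / (min(face_var, background_var) + 1e-9))

def resolution_mismatch(gray: np.ndarray, face_box: tuple[int, int, int, int],
                        max_ratio: float = 4.0) -> bool:
    return sharpness_ratio(gray, face_box) > max_ratio
```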

Because the technology is so new, detection algorithms are still under active development. The Deepfake Detection Challenge recently offered US$1 million in prizes to the algorithms that detected this type of fakery most accurately. The challenge brought together AWS, Facebook, Microsoft, the Partnership on AI’s Media Integrity Steering Committee, and academics from around the world. The winning model is available on GitHub.

Although humans are not as good as computers at detecting this kind of fraud, MIT has built a website where people can test their ability to identify deepfakes: DeepFakes, Can You Spot Them?

Texture Analysis

To some degree, several texture analysis algorithms can distinguish real skin texture from the texture of images captured from a photo, video, or mask. An evolution of this mitigation, described in some scientific papers, is blood flow analysis. It relies on the small color variations in the skin caused by the heartbeat and can identify even attacks with hyper-realistic 3D masks that mimic skin texture.

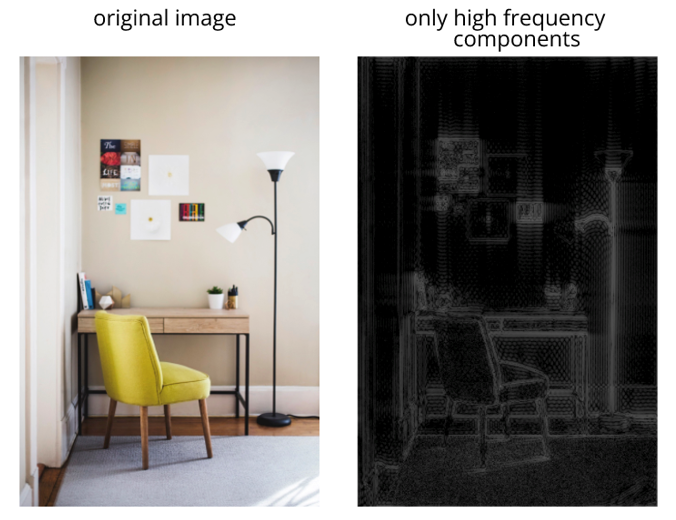
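Blood flow analysis is commonly known in the literature as remote photoplethysmography (rPPG). Its simplest form can be sketched as follows. The sketch assumes that the caller has already tracked a skin region, such as the forehead, and computed its mean green-channel value for every frame. The green channel is a common choice because it carries a strong pulse signal. The 0.7 to 4 Hz band (about 42 to 240 beats per minute) and the `band_power_ratio` output are assumptions for illustration. Published methods add detrending, motion compensation, and more robust signal separation.

```python
import numpy as np

def estimate_pulse(green_means, fps: float, low_hz: float = 0.7, high_hz: float = 4.0):
    """Estimate the pulse from per-frame mean green values of a skin region.

    Returns (bpm, band_power_ratio): the dominant frequency inside the plausible
    heart-rate band in beats per minute, and the share of the non-DC power at that
    peak. A printed photo or rigid mask should show no clear periodic peak.
    """
    x = np.asarray(green_means, dtype=np.float64)
    x = x - x.mean()                                  # drop the constant skin colour (DC)
    power = np.abs(np.fft.rfft(x * np.hanning(len(x)))) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fps)
    band = (freqs >= low_hz) & (freqs <= high_hz)
    total = power[1:].sum()
    if not band.any() or total == 0.0:
        return 0.0, 0.0
    peak = np.argmax(power[band])
    bpm = float(freqs[band][peak] * 60.0)
    return bpm, float(power[band][peak] / total)
```

For example, a 10-second clip at 30 fps whose green signal oscillates at 1.2 Hz yields roughly 72 bpm.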

Although blood flow analysis is still very complex, several simpler algorithms can already differentiate basic textures, making common attacks such as paper masks much harder to pull off. Most of these algorithms start from the Fourier Transform. It converts an image from the spatial domain to the frequency domain, i.e., it lets you analyze the image according to how quickly pixel intensities change. From the Fourier Transform we can say, for example, that images with many high-frequency components have better-defined edges, since these components correspond to large intensity variations over a small space. Conversely, removing the low-frequency components and keeping only the high-frequency ones retains little more than the edges, as the images below show.

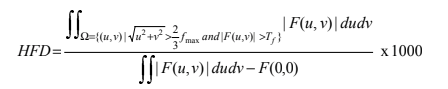

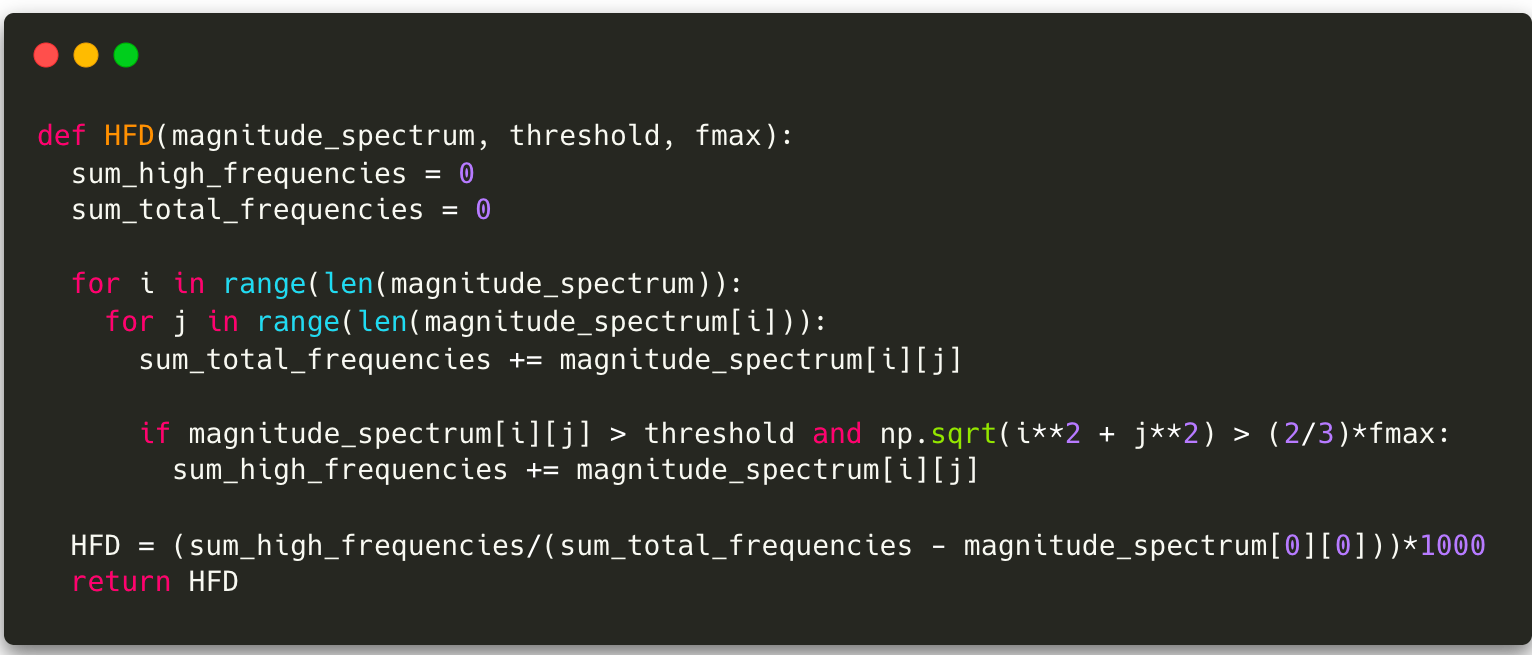
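An ideal high-pass filter in the frequency domain produces edge-only images like these. The sketch below uses NumPy’s FFT. The `cutoff` is a parameter chosen for illustration and is measured in cycles per pixel, where 0.5 is the highest frequency an image can represent. A sharp cut like this introduces some ringing that smoother filters, such as a Gaussian, avoid.

```python
import numpy as np

def high_pass(gray: np.ndarray, cutoff: float) -> np.ndarray:
    """Remove every frequency whose radius is below `cutoff` (cycles/pixel)."""
    spectrum = np.fft.fft2(gray.astype(np.float64))
    fy = np.fft.fftfreq(gray.shape[0])[:, None]  # vertical frequency of each spectrum row
    fx = np.fft.fftfreq(gray.shape[1])[None, :]  # horizontal frequency of each spectrum column
    radius = np.sqrt(fx ** 2 + fy ** 2)
    spectrum[radius < cutoff] = 0.0              # this also drops the DC term (mean brightness)
    return np.real(np.fft.ifft2(spectrum))
```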

This kind of interpretation in the frequency domain is the basis of many image processing and texture analysis algorithms. One example is HFD, proposed in the paper Live face detection based on the analysis of Fourier spectra. According to the paper, the way light is distributed on the 2D surfaces usually used for spoofing causes these images to have fewer high-frequency components than images of real faces. This observation led to the high-frequency descriptor (HFD), which measures the share of an image’s Fourier spectrum that lies in the high-frequency band.

HFD is an extremely simple computation that can still catch the cruder spoofing attempts. It can therefore run even on mobile devices, unlike deep learning and machine learning algorithms that require a lot of processing power. There are also more efficient algorithms that, like HFD, use the Fourier Transform. It’s worth pointing out, however, that these algorithms generally work at the pixel level and are therefore strongly influenced by image quality and lighting conditions.
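The sketch below shows an HFD-style computation. We reconstruct the descriptor, from our reading of the Li et al. paper, as a ratio of two sums of spectral magnitude. The numerator covers frequencies above two-thirds of the highest frequency. The denominator covers frequencies above a small low-frequency threshold `f0`. Treat both cutoffs, and any decision threshold applied to the result, as assumptions to check against the paper. The key property matches the text: blurrier, recaptured images concentrate energy at low frequencies and get a lower score.

```python
import numpy as np

def high_frequency_descriptor(gray: np.ndarray, f0: float = 0.02,
                              high_fraction: float = 2.0 / 3.0) -> float:
    """HFD-style ratio: high-frequency spectral magnitude over non-low-frequency magnitude."""
    magnitude = np.abs(np.fft.fft2(gray.astype(np.float64)))
    fy = np.fft.fftfreq(gray.shape[0])[:, None]
    fx = np.fft.fftfreq(gray.shape[1])[None, :]
    radius = np.sqrt(fx ** 2 + fy ** 2)
    f_max = radius.max()                                    # highest radial frequency present
    high = magnitude[radius > high_fraction * f_max].sum()
    total = magnitude[radius > f0].sum()                    # excludes DC and the lowest frequencies
    return float(high / total) if total > 0 else 0.0
```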

Structural Analysis

The main goal of structural analysis is to distinguish images of real people in 3D from spoofing attempts that use 2D media. This kind of analysis is typically used by systems like Face ID, which rely on special cameras and sensors to build a detailed 3D map of the person’s face.

On iOS mobile devices, it’s possible to use ARKit, a framework often used in the development of augmented reality apps and games. ARKit is capable of identifying 3D structures, such as a human face, although it can still be easily fooled by an ultra-realistic silicone mask. On Android, ARCore Augmented Faces has a similar function and is also a native alternative for this task.

These technologies, however, are not available on all phones. Some algorithms therefore work around this limitation by extracting the structural differences between the two image types from ordinary 2D photos. Methods such as the one proposed in the article Face Spoof Detection with Image Distortion Analysis extract the specular component of the image, i.e., its reflection component. According to that article, images recaptured from 2D media are expected to show a different reflection pattern than legitimate photos of a real 3D face.

In Face Liveness Detection From a Single Image via Diffusion Speed Model, diffusion speed is used on the premise that illumination on 2D surfaces is uniformly distributed and should therefore diffuse slowly when the algorithm is applied. In contrast, 3D surfaces have a higher “diffusion speed” because the illumination on them is not uniform.
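The diffusion-speed idea can be sketched as follows. Kim et al. use a nonlinear diffusion solved with an additive operator splitting scheme and then feed local speed patterns to a classifier. To keep the example short, we swap in the classic Perona-Malik diffusion with a simple explicit update. As we understand the paper, the speed is the per-pixel difference between the log of the original image and the log of the diffused image. The iteration count, `kappa`, and step size are assumptions. Any liveness decision on top of the speed map, such as a trained classifier, is left out.

```python
import numpy as np

def perona_malik(image: np.ndarray, iterations: int = 20,
                 kappa: float = 15.0, step: float = 0.2) -> np.ndarray:
    """Explicit Perona-Malik diffusion (step <= 0.25 keeps the 4-neighbour scheme stable)."""
    u = image.astype(np.float64).copy()

    def conduction(d: np.ndarray) -> np.ndarray:
        # Close to 1 in flat regions, close to 0 across strong edges.
        return np.exp(-(d / kappa) ** 2)

    for _ in range(iterations):
        padded = np.pad(u, 1, mode="edge")  # replicate borders: no flux out of the image
        north = padded[:-2, 1:-1] - u
        south = padded[2:, 1:-1] - u
        west = padded[1:-1, :-2] - u
        east = padded[1:-1, 2:] - u
        u += step * sum(conduction(d) * d for d in (north, south, west, east))
    return u

def diffusion_speed(gray: np.ndarray, iterations: int = 20) -> np.ndarray:
    """Per-pixel speed map |log(u0 + 1) - log(uL + 1)| for a non-negative grayscale image."""
    u0 = gray.astype(np.float64)
    u_final = perona_malik(u0, iterations)
    return np.abs(np.log1p(u0) - np.log1p(u_final))
```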

Conclusion

Finally, it’s worth remembering that, as with everything in cybersecurity and anti-fraud, there is no “silver bullet” that can solve every problem with facial biometrics. Taking implementation costs and available resources into account, the best approach is to combine as many mitigation techniques as feasible. This produces a robust, secure product for the system where it will be deployed.
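Combining techniques can be as simple as fusing per-technique liveness scores. The sketch below assumes each detector outputs a score in [0, 1], where higher means more likely live. The detector names, weights, veto rule, and thresholds are hypothetical and would need calibration on real attack data.

```python
from typing import Mapping

def fuse_scores(scores: Mapping[str, float], weights: Mapping[str, float],
                veto_below: float = 0.1, accept_at: float = 0.7) -> bool:
    """Accept the session only if no detector vetoes it and the weighted mean is high enough."""
    if not scores:
        return False
    # A detector that is very confident it saw an attack blocks the session on its own.
    if any(score < veto_below for score in scores.values()):
        return False
    total_weight = sum(weights.get(name, 1.0) for name in scores)
    if total_weight <= 0:
        return False
    fused = sum(weights.get(name, 1.0) * score for name, score in scores.items()) / total_weight
    return fused >= accept_at
```

The veto rule reflects the layered approach: a cheap check that is confident about an attack should not be outvoted by the others.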

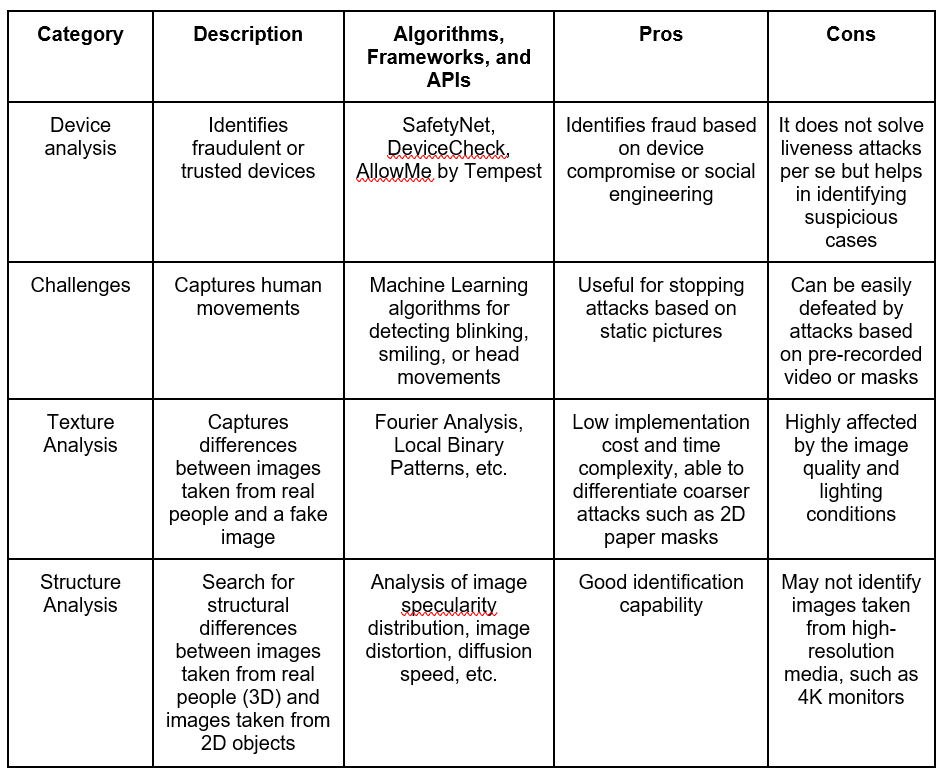

The table above is based on the summary in the article Face Liveness Detection Using a Flash Against 2D Spoofing Attack. It compiles the methods presented here, their main algorithms, frameworks, and APIs, and the pros and cons of each.

References

AllowMe, Análise de dispositivos: por que ela é mais eficiente do que dados cadastrais [Device analysis: why it is more efficient than registration data], available at https://www.allowme.cloud/conteudoallowme-analise-de-dispositivos-por-que-ela-e-mais-eficiente-do-que-dados-cadastrais/index.html, accessed on 04 April 2022

Apple Docs, DeviceCheck, available at https://developer.apple.com/documentation/devicecheck, accessed on 04 April 2022

Dorothy E. Denning, “Why I Love Biometrics: It’s ‘liveness’, not secrecy, that counts.”

Android Developers, Protect against security threats with SafetyNet, available at https://developer.android.com/training/safetynet, accessed on 04 April 2022

IOSSecuritySuite, available at https://github.com/securing/IOSSecuritySuite, accessed on 04 April 2022

Jiangwei Li, Yunhong Wang, Tieniu Tan, and Anil K. Jain, “Live face detection based on the analysis of Fourier spectra,” Proc. SPIE 5404, Biometric Technology for Human Identification, 2004

Kevin Tussy, John Wojewidka, and Josh Rose, Liveness.com, available at https://liveness.com/, accessed on 04 April 2022

MIT Media Lab, DeepFakes, Can You Spot Them? available at https://detectfakes.media.mit.edu, accessed on 04 April 2022

K. Chan et al., “Face Liveness Detection Using a Flash Against 2D Spoofing Attack,” in IEEE Transactions on Information Forensics and Security, 2018

RootBeer, available at https://github.com/scottyab/rootbeer, accessed on 04 April 2022

Unnikrishnan and A. Eshack, “Face spoof detection using image distortion analysis and image quality assessment,” 2016 International Conference on Emerging Technological Trends (ICETT), 2016

Seferbekov and E. Lee, DeepFake Detection (DFDC) Solution by @selimsef, available at https://github.com/selimsef/dfdc_deepfake_challenge, accessed on 04 April 2022

Kim, S. Suh, and J.-J. Han, “Face Liveness Detection From a Single Image via Diffusion Speed Model,” in IEEE Transactions on Image Processing, 2015